Rei Robotic Emotions Interface by Guorong Luo


DESIGN DETAILS

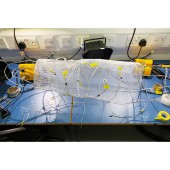

DESIGN NAME: Rei

PRIMARY FUNCTION: Robotic Emotions Interface

INSPIRATION: According to expert predictions, by 2022 personal devices would know more about a person's emotional state than that person's own family. But how about the other way around? Can humans actually understand the emotions of robots? The widespread use of robots will make them a new species in the future, alongside human beings and animals, and robots will interact more deeply with humans. We will feel more comfortable and communicate better with a species that has emotions and feelings than with mere machines.

UNIQUE PROPERTIES / PROJECT DESCRIPTION: Since the interaction between robotic technologies, in particular AI, and human beings would be enriched by a degree of emotional immersion, we propose a multi-sensory interface that expands this interaction. Our prototype of a skin mediates between the robotic species and human empathy by addressing the olfactory sense in particular, as well as visual, acoustic and haptic sensation. This multi-sensory experience is intended for use in the near future to support and improve AI interfaces.

OPERATION / FLOW / INTERACTION: People simply put their hands on this skin-like interface and feel different emotions through the multi-sensory experience provided by Rei. Each emotion is represented by a particular scent, a particular haptic experience, and a visual and acoustic expression. Receiving this information helps us understand robots' emotions, so that we feel more comfortable and communicate better with them than we would with mere machines.

PROJECT DURATION AND LOCATION: The project started and finished in November 2018 in London, and was exhibited at the Sense-ability exhibition at the Royal College of Art, London.

FITS BEST INTO CATEGORY: Interface, Interaction and User Experience Design

PRODUCTION / REALIZATION TECHNOLOGY: Materials: aluminium for the supporting structure; silicone for the robot 'skin'; Arduino components for providing the scent, haptic, visual and acoustic expression.

SPECIFICATIONS / TECHNICAL PROPERTIES: Size: Width 1000 mm x Depth 220 mm x Height 250 mm.

TAGS: Interface, Robot, Emotion, Multi-sensory, Artificial Intelligence

RESEARCH ABSTRACT: We conducted trend and background research on the psychology of the different senses to identify possible applications. We also interviewed leading perfume designers and scent makers to find out how to translate emotions into smell. After finishing the scent model, I conducted user testing and adjusted the scents based on the preferences of the majority of participants. Once the result was satisfactory, we applied the same method to the combination of the different sensory experiences and gradually arrived at the final interactive installation that demonstrates our concept.

CHALLENGE: The main creative challenge was maintaining consistency while designing a multi-sensory experience. Designing scents and tactile experiences for emotions, which are very abstract, was especially hard, so we carried out many expert interviews and prototype iterations. Finally, by addressing the olfactory sense in particular, as well as visual, acoustic and haptic sensation, we open up human sensation and turn it into a whole-body somatosensory experience.

ADDED DATE: 2019-02-25 00:11:36

TEAM MEMBERS (5): Guorong Luo, Moritz Dittrich, Janina Frye, Sushila Pun and Yiling Zhang

IMAGE CREDITS: Image #1-5: Photographer Steven 2018


Visit the following page to learn more: http://www.grluo.com

AWARD DETAILS


Rei Robotic Emotions Interface by Guorong Luo is a Winner in the Interface, Interaction and User Experience Design Category, 2018 - 2019.
